Hersh Kshetry · Engineering Insights · 6 min read

How to Leverage AI in EPC Workflows

Not Hype, Table Stakes: Deploying Deterministic, Auditable AI Workflows in Engineering

Ever since ChatGPT launched in research preview, Puran Water has been experimenting with LLMs and looking for ways to apply AI in engineering workflows. Initially, the use cases were limited to research-related tasks. Helpful, but not transformative. The real breakthrough came with custom tool calling, which opened up a seemingly limitless universe of applications for any workflow that can be done sitting at a desk.

In EPC workflows, the most critical requirements for any automation or streamlining effort are that processes must be (1) deterministic and (2) auditable. Out-of-the-box Large Language Models (LLMs) fail to meet these criteria. Their responses can vary from run to run, even for the same prompt, and their reasoning is opaque. However, with tool-calling LLMs and properly structured workflows, deterministic and auditable engineering automation is now within reach for domain experts.

A Tested Approach to AI-Enabled Engineering

1. Leverage Software Engineering AI Agents

Start with SWE (Software Engineering) AI agents. One option is Anthropic’s Claude Max plan, which includes Claude Code and, in our experience, offers strong value for the cost. These agents can help you rapidly develop the infrastructure needed for your engineering workflows.

2. Create MCP Wrappers for Repetitive Tasks

For every task performed more than a few times (e.g., running an engineering template, generating a datasheet, or performing standard calculations), develop an MCP (Model Context Protocol) wrapper to expose it as a tool to LLMs. There are several ways to expose tools to LLMs; we’ve decided to build ours as MCP servers because this keeps them compatible across clients. An MCP server can be built and maintained in one place and used by any MCP client, and any LLM can use the tools as long as the model provider supports the protocol. Anthropic introduced MCP as an open standard, and it has since received support from OpenAI, Microsoft, and Google. That adoption makes it a stable foundation for tool development, with little risk of becoming outdated. This approach has several advantages:

- For engineering templates: Developing a script to replace legacy Excel or MathCAD templates becomes far easier, even for non-programmers, who can read and understand the code with help from a SWE AI agent

- For engineering documentation: A Papermill-Jupyter Notebook approach can parameterize documents (similar to MathCAD) while making them easily accessible to LLMs via MCP
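As a minimal sketch of what such a wrapper might look like, the block below keeps the calculation as a plain Python function and registers it as a tool through `FastMCP` from the official MCP Python SDK. The function `pipe_velocity`, the server name, and the choice of calculation are illustrative assumptions, not taken from the Puran Water servers. The import is guarded so the calculation can still be run and tested where the SDK is not installed.

```python
import math

try:
    # FastMCP ships with the official MCP Python SDK (the `mcp` package).
    from mcp.server.fastmcp import FastMCP
except ImportError:  # the calculation itself does not need the SDK
    FastMCP = None


def pipe_velocity(flow_gpm: float, inner_diameter_in: float) -> dict[str, float]:
    """Mean velocity in a full circular pipe (US customary units)."""
    if flow_gpm < 0 or inner_diameter_in <= 0:
        raise ValueError("flow must be >= 0 and diameter must be > 0")
    flow_cfs = flow_gpm / 448.831  # 1 ft^3/s = 448.831 US gal/min
    area_ft2 = math.pi * (inner_diameter_in / 12.0) ** 2 / 4.0
    return {
        "velocity_ft_s": round(flow_cfs / area_ft2, 3),
        "flow_cfs": round(flow_cfs, 4),
        "area_ft2": round(area_ft2, 4),
    }


def build_server():
    """Register the calculation as an MCP tool (hypothetical server name)."""
    if FastMCP is None:
        raise RuntimeError("the `mcp` package is required to build the server")
    server = FastMCP("pipe-hydraulics-demo")
    server.tool()(pipe_velocity)  # same effect as decorating with @server.tool()
    return server


# To serve the tool to an MCP client, one would call: build_server().run()
result = pipe_velocity(750, 7.981)  # 750 GPM in 8-inch Schedule 40 pipe
```

Keeping the engineering logic in an ordinary function means it can be checked against an existing template without any LLM or MCP client in the loop.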

3. Build Agent-Driven Workflows

For workflows performed repeatedly, use an agent framework (such as Agno) to automate them, with agents calling the MCP servers. This reduces human involvement to initiating the workflow and reviewing outputs. An alternative approach is to create parameterized Jupyter notebooks that encapsulate the full workflow (or logical chunks of it) and expose these notebooks as tools via MCP.
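One way the parameterized-notebook alternative might be driven from Python is sketched below. `papermill.execute_notebook` is Papermill’s documented entry point; the helper names, the `runs` output directory, and the parameter names in the usage note are hypothetical. Naming each executed notebook after a hash of its inputs is a design choice of this sketch, so that identical inputs always map to the same output file.

```python
import hashlib
import json
from pathlib import Path


def run_id(parameters: dict) -> str:
    """Short, stable identifier derived from the input parameters."""
    canonical = json.dumps(parameters, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def run_notebook(template: str, parameters: dict, out_dir: str = "runs") -> Path:
    """Execute a parameterized notebook and return the path of the executed copy."""
    import papermill as pm  # third-party dependency, imported only when needed

    out_path = Path(out_dir) / f"{Path(template).stem}_{run_id(parameters)}.ipynb"
    out_path.parent.mkdir(parents=True, exist_ok=True)
    # Papermill injects `parameters` after the cell tagged "parameters" and
    # saves the executed notebook, outputs included, to out_path.
    pm.execute_notebook(template, str(out_path), parameters=parameters)
    return out_path
```

A call such as `run_notebook("pump_sizing.ipynb", {"flow_gpm": 750})` (hypothetical template name) would leave behind an executed notebook that records both the inputs and every intermediate cell output.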

4. Avoid Over-Engineering

Resist the temptation to start ambitious projects. I made the mistake of attempting to create MCP servers flexible enough to perform 90% of common fluids, heat transfer, or water chemistry calculations a process engineer in my field would typically do. The open-sourced MCP servers below are a result of this effort, but the approach was flawed.

It’s far better to build incrementally, in parallel with your current workflow, so that:

- You can rigorously test the tool-calling LLM output against your current tools to ensure proper functioning

- You don’t over-build tools that never get used in actual workflows
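Testing a new tool against current tools can be made routine with a small comparison harness. The sketch below is one possible shape: `compare_to_legacy`, the tolerance, and the stand-in calculation are assumptions, and the reference numbers are placeholders standing in for values read from an existing spreadsheet or template.

```python
import math
from typing import Callable, Mapping, Sequence


def compare_to_legacy(
    tool: Callable[..., float],
    cases: Sequence[tuple[Mapping[str, float], float]],
    rel_tol: float = 1e-3,
) -> list[str]:
    """Describe every case where the tool and the legacy value disagree."""
    failures = []
    for inputs, legacy_value in cases:
        result = tool(**inputs)
        if not math.isclose(result, legacy_value, rel_tol=rel_tol):
            failures.append(f"{dict(inputs)}: tool={result!r}, legacy={legacy_value!r}")
    return failures


# Stand-in for a new tool: velocity head v^2 / (2 g) in feet.
def velocity_head_ft(velocity_ft_s: float) -> float:
    return velocity_ft_s ** 2 / (2 * 32.174)


# Placeholder reference values, as if copied from the legacy template.
legacy_cases = [
    ({"velocity_ft_s": 4.0}, 0.2487),
    ({"velocity_ft_s": 8.0}, 0.9946),
]
mismatches = compare_to_legacy(velocity_head_ft, legacy_cases)
print(mismatches or "tool matches legacy values within tolerance")
```

Running the same harness on every change to a tool gives early warning when a refactor shifts a result.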

Puran Labs - MCP Server Demonstrations

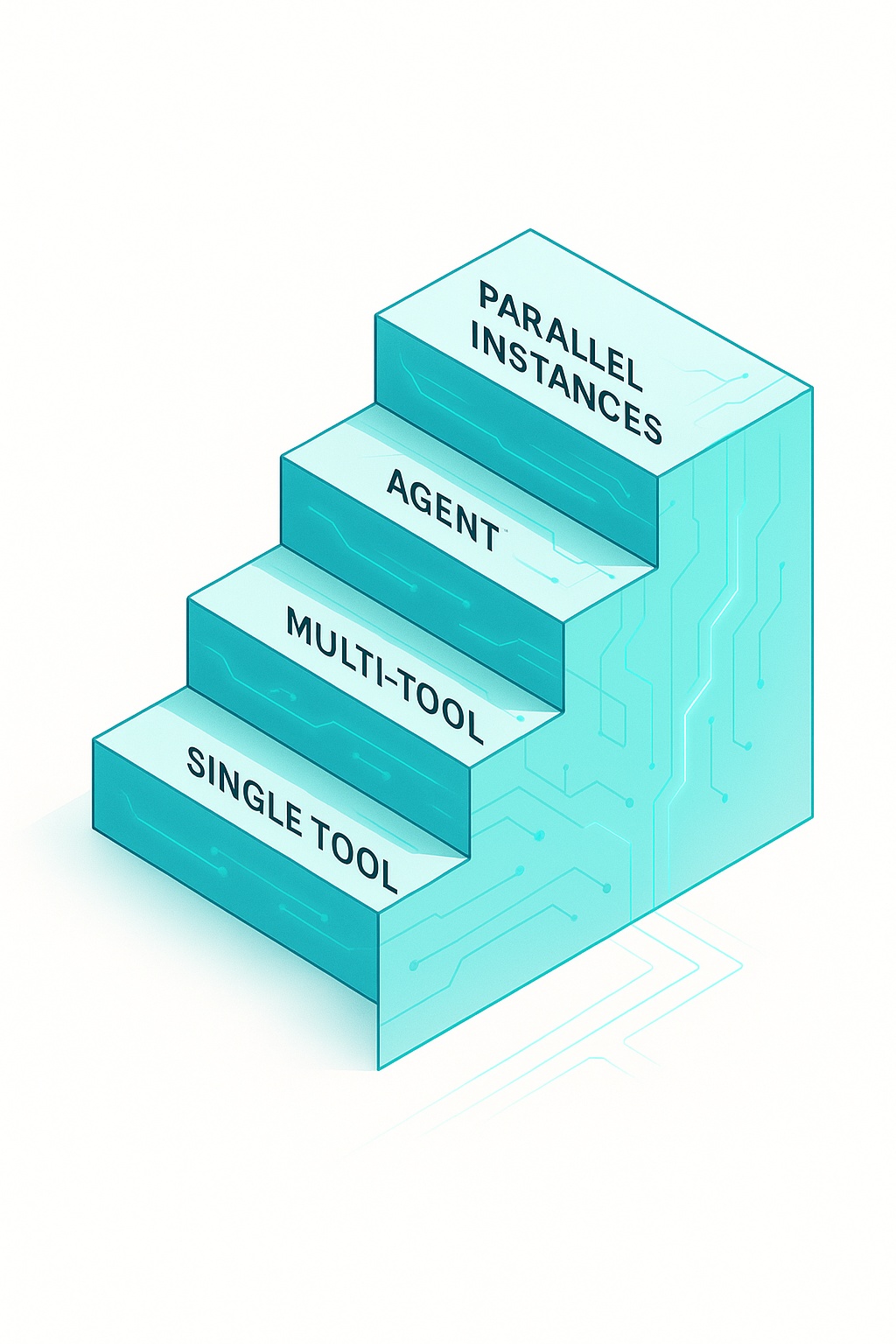

The MCP server demonstrations use tool-calling LLMs through a chat interface. While this format illustrates the core functionality, it represents the least powerful implementation of these systems. Chat interfaces inherently constrain LLMs to a single-threaded, human-paced interaction—essentially reducing them to interactive assistants.

The true power of tool-calling LLMs emerges when deployed in automated workflows that can:

- Execute multiple instances in parallel without human bottlenecks

- Process batch operations at scale

- Chain complex multi-step calculations automatically

- Generate auditable outputs for both human and AI review

Despite these limitations, the chat interface effectively demonstrates two key advantages of tool-calling LLMs over standard LLMs:

- Deterministic results: The same prompt returns the same calculated values on every run, because the numbers come from the tool rather than from the model (test this by running the same prompt multiple times)

- Auditable results: Click tool call badges to inspect the exact parameters passed and results returned

When combined with the Papermill-Jupyter Notebook approach, these tool-calling agents create a complete audit trail that can be verified by both human engineers and AI systems.

Deterministic vs. Probabilistic Results Comparison

To illustrate the critical difference between tool-calling and standard LLMs, consider this engineering calculation:

Prompt: Size a pump to move 750 GPM of wastewater from a wet well 8 feet below grade to a treatment tank 25 feet above grade. The system uses 8-inch Schedule 40 steel pipe with 150 feet of suction line and 300 feet of discharge line. The system includes the following fittings:

Suction Line Fittings:

- 1 sharp entrance at wet well

- 2 × 90° elbows

- 1 gate valve

Discharge Line Fittings:

- 4 × 90° elbows

- 2 × 45° elbows

- 1 swing check valve

- 1 gate valve

- 1 normal exit at treatment tank

Calculate the pump’s total dynamic head (TDH) and NPSH available.

| Run | Tool-Calling LLM (Claude Sonnet 4 with Fluids MCP Server) | Standard LLM (Claude Sonnet 4 without tools) |
|---|---|---|
| 1 | TDH = 38.3 feet, NPSHA = 23.6 feet | TDH = 40.0 feet, NPSHA = 23.3 feet |
| 2 | TDH = 38.3 feet, NPSHA = 23.6 feet | TDH = 41.0 feet, NPSHA = 22.4 feet |
| 3 | TDH = 38.3 feet, NPSHA = 23.6 feet | TDH = 51.6 feet, NPSHA = 19.5 feet |

Analysis: The tool-calling LLM produces identical results across all runs because it uses deterministic engineering calculations through the MCP server. Each calculation follows the same methodology, uses identical fluid properties from CoolProp, and applies consistent friction factors. The standard LLM, despite being highly capable, produces different results on each run due to its probabilistic nature: variations in assumed values, calculation methods, and rounding approaches lead to substantially different engineering outcomes.
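For reference, the two quantities requested in the prompt follow from standard textbook relations. The form below is one common statement of them (Darcy-Weisbach friction plus loss coefficients for fittings); it is an assumption, not a confirmed description of what the Fluids MCP server computes. Here $z$ is elevation, $f$ the Darcy friction factor, $L$ and $D$ the pipe length and inside diameter, $K_i$ the fitting loss coefficients, $v$ the mean velocity, and $p_{\text{atm}}$ and $p_v$ the atmospheric and vapor pressures. Both vessels are assumed open to atmosphere, and the pump elevation $z_{\text{pump}}$ is not given in the prompt and must be assumed.

$$
\begin{aligned}
h_L &= \left( f\,\frac{L}{D} + \sum_i K_i \right) \frac{v^2}{2g} \\
\mathrm{TDH} &= \left( z_{\text{discharge}} - z_{\text{suction}} \right) + h_{L,\text{suction}} + h_{L,\text{discharge}} \\
\mathrm{NPSH_A} &= \frac{p_{\text{atm}} - p_v}{\rho g} - \left( z_{\text{pump}} - z_{\text{suction}} \right) - h_{L,\text{suction}}
\end{aligned}
$$

With the wet well 8 feet below grade and the tank 25 feet above it, the static term is 33 feet, so the tool-calling result of 38.3 feet implies roughly 5.3 feet of combined friction and fitting losses. The spread in the standard LLM runs comes from the terms that require assumed values, such as $f$, $K_i$, and fluid properties.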

Practical Implementation Tips

Start Small: Begin with a single, well-defined workflow that you perform regularly. Build an MCP server for just that workflow, test it thoroughly against your existing methods, and only then expand.

Focus on Auditability: Every MCP server should produce outputs that clearly show:

- Input parameters used

- Calculation methodology

- Intermediate results

- Final outputs with appropriate units

- References to standards or methods employed
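One way to carry the items listed above through a tool call is to return them together as a single structured record. The sketch below is an illustration only: the `AuditRecord` class, its field names, and the reference string are hypothetical placeholders, and the calculation is deliberately trivial.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AuditRecord:
    """Audit trail for one calculation; field names are illustrative."""
    inputs: dict
    methodology: str
    intermediates: dict
    outputs: dict  # each output carries its unit alongside its value
    references: list = field(default_factory=list)

    def to_json(self) -> str:
        # sort_keys yields identical text for identical content
        return json.dumps(asdict(self), sort_keys=True, indent=2)


def static_head(suction_elev_ft: float, discharge_elev_ft: float) -> AuditRecord:
    """Static head between two free surfaces, returned with its audit trail."""
    head = discharge_elev_ft - suction_elev_ft
    return AuditRecord(
        inputs={"suction_elev_ft": suction_elev_ft,
                "discharge_elev_ft": discharge_elev_ft},
        methodology="static head = discharge elevation - suction elevation",
        intermediates={},
        outputs={"static_head": {"value": head, "unit": "ft"}},
        references=["placeholder: cite the governing standard or method here"],
    )


record = static_head(-8.0, 25.0)  # wet well 8 ft below grade, tank 25 ft above
print(record.to_json())
```

Because the record is plain JSON, it can be stored next to the deliverable and reviewed later by an engineer or by another agent.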

Enable Parallel Workflows: The true power emerges when you can run multiple instances of a workflow simultaneously. Design your MCP servers to be stateless and thread-safe.
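A stateless tool is one whose result depends only on its arguments, which is what makes concurrent calls safe. The sketch below illustrates the idea with Python’s standard `concurrent.futures`; threads stand in for simultaneous tool calls, and the function, diameter, and viscosity value are illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def reynolds_number(velocity_ft_s: float, diameter_ft: float, nu_ft2_s: float) -> float:
    """Stateless: reads no globals and mutates nothing shared."""
    return velocity_ft_s * diameter_ft / nu_ft2_s


# Illustrative batch: one pipe size, several velocities.
cases = [
    {"velocity_ft_s": v, "diameter_ft": 0.665, "nu_ft2_s": 1.08e-5}
    for v in (2.0, 4.0, 6.0, 8.0)
]

with ThreadPoolExecutor(max_workers=4) as pool:
    # map() preserves input order, so results line up with cases
    parallel = list(pool.map(lambda c: reynolds_number(**c), cases))

sequential = [reynolds_number(**c) for c in cases]
print(parallel == sequential)
```

If a tool must cache data or write files, keeping that state keyed by the call’s inputs (rather than in module-level variables) preserves the same property.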

Integrate with Existing Tools: Don’t try to replace everything at once. MCP servers can wrap existing tools (Excel templates, Python scripts, commercial software APIs) to make them accessible to AI agents.

The Path Forward

The combination of tool-calling LLMs and MCP servers, which give any MCP client access to custom tools, represents a compelling opportunity to reimagine how engineering work gets done. We’re moving from a world where engineers adapt to software interfaces to one where AI agents use custom tools on our behalf in autonomous workflows.

An engineer who can effectively orchestrate these agents with domain-specific tools can accomplish what previously required entire teams, along with the friction of less-than-perfect communication and too-frequent meetings.

The key is to start with your specific workflows, build incrementally, and always maintain the deterministic, auditable nature that engineering demands.

The MCP servers discussed in this article are open-sourced and available at github.com/puran-water.